OpenAI Sora Video Generation App Review – The Future of AI Video Creation

OpenAI’s Sora has become one of the most talked-about new AI tools of 2025. The company behind ChatGPT and DALL·E has moved into video generation, letting users turn text, images, or short clips into completely new video scenes. Sora has been praised for its creativity and realism, but it has also raised big questions about copyright, control, and privacy. Let’s take a detailed look at what Sora is, how it works, what’s new, and whether it’s worth trying right now.

What Exactly Is Sora?

Sora is a text-to-video model built by OpenAI. You give it a prompt (words, or sometimes images), and it tries to generate a video matching that idea.

Some key specs:

- It can produce videos in 1080p resolution.

- Video length is limited (in current use, clips are short).

- It can take image or video input and extend scenes or generate new ones.

- Microsoft has integrated Sora into Bing’s video creator, giving the public access (in some regions) to generate short clips from text prompts.

Sora is already more than a lab experiment: it’s being rolled into other products.

What’s New in the Latest Version

The current version, Sora 2, includes several improvements over the earlier prototype. The upgraded model delivers better motion handling, fewer visual glitches, and more realistic lighting and camera movement. It can also combine multiple clips or scenes more smoothly than before.

OpenAI has also promised granular control for copyright owners, meaning creators and studios can choose whether their characters or assets appear in Sora-generated content. This comes after criticism from Hollywood agencies and creators who said the tool could generate videos featuring copyrighted characters without permission.

In addition, OpenAI is planning to merge Sora more closely with ChatGPT, so users will eventually be able to create videos directly from chat conversations. This integration is expected to make video creation even easier for everyday users.

The Popularity Surge

When OpenAI opened Sora to the public through a limited, invite-based release on app stores, the response was immediate. Within days, the app had surpassed one million downloads and briefly topped the U.S. App Store charts. People on social media began sharing clips showing Sora’s ability to create realistic scenes such as cinematic city shots, nature videos, and even simple storyboards for short films.

This quick adoption shows that there is massive demand for tools that let anyone create visual content without needing cameras, actors, or editing skills.


What Sora Does Well

One of Sora’s biggest strengths is speed. It can turn a short text prompt into a visually coherent video within minutes. The results often look impressive, with smooth movement, realistic shadows, and natural camera angles.

Another strong point is flexibility. Users can combine text prompts with photos or short clips to guide the AI toward a specific look. For example, someone can upload a still image of a person and tell Sora to animate that person walking through a park.

Sora also allows for a surprising level of creativity. Artists and advertisers are already experimenting with it to create concept videos, animated story ideas, and quick promotional material. Because it comes from OpenAI, Sora can also be used alongside other OpenAI tools such as DALL·E and ChatGPT for even broader creative possibilities.

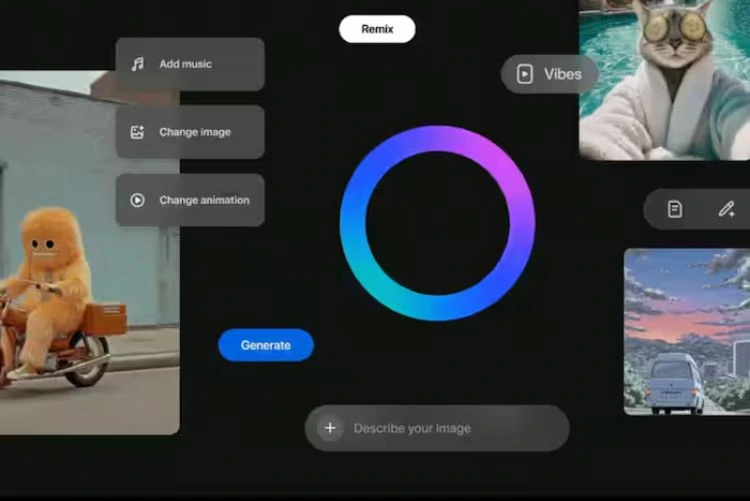

The app’s user interface is simple, making it possible for anyone, even people with no editing background, to generate a professional-looking video clip.

The Limitations

Even though Sora looks revolutionary, it is far from perfect. The most common issue is visual artifacts, which are small but noticeable glitches in the generated videos. Objects can sometimes merge together, shadows may behave oddly, or the motion of people can look slightly robotic. Researchers have even published studies analyzing these imperfections and ways to detect them automatically.

Another major issue is copyright. Many creators are worried that their existing content might be used in training the model or replicated without permission. Hollywood agencies such as CAA have warned that Sora could pose risks to creative professionals if proper controls are not in place.

There’s also the question of content moderation. OpenAI restricts certain types of prompts, such as violent, adult, or politically sensitive content, to prevent misuse. These restrictions are necessary, but they also limit how far creators can experiment within the tool.

The video length is another constraint. Currently, Sora can only generate short clips, not full-length videos or movies. For now, it’s better for short-form content or creative previews rather than full productions.

Finally, cost and computing power are potential challenges. High-quality AI video generation requires heavy GPU processing, which makes it expensive and sometimes slow during peak hours.

Real Use Cases

Despite its limitations, Sora is already being used in interesting ways. Some creators are using it to make short social media clips in the style of TikTok or Instagram Reels. Marketing teams are using Sora to pitch ideas to clients with quick animated mockups. Filmmakers are exploring how it can help visualize storyboards or plan shots before filming.

Because Sora accepts both text and image inputs, it’s particularly helpful for designers and advertisers who need to visualize products, packaging, or brand environments quickly.

The Controversy Around Copyright and AI

A big part of the discussion around Sora isn’t technical; it’s legal. OpenAI initially indicated that copyright holders would have to opt out if they didn’t want their material to appear in Sora-generated videos. Critics argue that this approach puts the burden on creators rather than on AI companies.

In October 2025, after concerns raised by artists and studios, OpenAI announced new controls for Sora 2, saying it wants to give rights holders “more granular control” over how their works might appear in AI-generated videos. Still, some industry experts remain skeptical, saying enforcement will be difficult.

What’s Next for Sora

- More granular control for rights holders, so they can opt out fully or control how their work is used.

- Better quality in Sora 2, with fewer glitches, better motion, and improved audio sync.

- Possibly deeper integration into ChatGPT, so you can generate videos directly in chat.

- More monetization or revenue sharing to reward creators whose work is used.

- Wider global availability (right now, Sora is mostly limited to the U.S. and Canada).

- Stronger safety filters and moderation to prevent misuse and deepfakes.

Final Thoughts

Sora is one of the most advanced video generation models available today, and it shows just how fast AI technology is evolving. It makes creating short, realistic videos easier than ever, something that used to require expensive equipment and professional editing.

However, it’s still an early-stage product. Glitches, ethical questions, and limited control make it more of an experimental tool than a professional production platform. For now, Sora is best suited for creative exploration, early concept development, and casual fun.

If you’re curious about what the future of video creation looks like, Sora is worth trying. But keep your expectations grounded: this is just the beginning of AI video, not the finished product.
